Visual Servoing of Aerospace Vehicles

Unmanned space vehicles are increasingly being used to perform science, surveillance, inspection and monitoring missions. Their levels of autonomy range from fully tele-operated to fully autonomous, requiring only very high-level mission commands. The more human-directed control an unmanned vehicle requires, the larger its support crew needs to be.

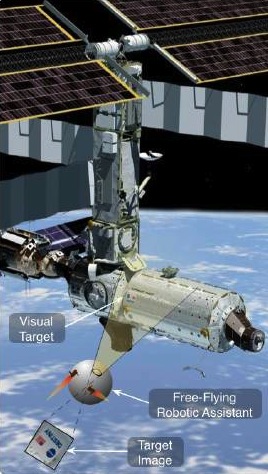

The AVS Lab is researching semi-autonomous visual sensing, navigation and control techniques for a free-flying craft or end-effector operating relative to another spacecraft. Consider the scenario where an unmanned space vehicle is to operate in close proximity to a larger craft, as illustrated in the figure on the right. The target craft can be either collaborative or non-collaborative in this effort.

To measure the relative motion of the trailing vehicle with respect to the lead vehicle, a range of sensing approaches can be employed, including stereo camera pairs, monocular vision, active visual scanning and thermal imaging. Here a passive visual sensing approach is investigated in which a video camera observes the target spacecraft and a distinct passive visual feature is tracked in real time. Note that the target does not emit an electronic signature as active optical beacons do, which reduces the overall signature emission of the vehicles. Rather, the targets would be uniquely colored shapes that can be tracked robustly. One such sensing example is the visual snake algorithm, which can rapidly segment an image and track a desired feature.
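The idea of tracking a uniquely colored shape can be illustrated with a much simpler stand-in than a visual snake. The sketch below is not the visual snake algorithm: it thresholds each frame on distance to a target color, takes the centroid of the matching pixels, and converts that centroid into a line-of-sight unit vector with an ideal pinhole camera model. The function names, the `tolerance` parameter, the camera-frame convention and the focal length in pixels are all illustrative assumptions, not part of the lab's software.

```python
import numpy as np


def track_colored_feature(image, target_rgb, tolerance=40.0):
    """Locate a colored feature in an H x W x 3 uint8 RGB image.

    Returns ((row, col), pixel_count) for the centroid of all pixels whose
    RGB distance to target_rgb is within tolerance, or (None, 0) if no
    pixel matches. Hypothetical helper for illustration only.
    """
    # Per-pixel Euclidean distance to the target color in RGB space
    diff = image.astype(np.float64) - np.asarray(target_rgb, dtype=np.float64)
    distance = np.linalg.norm(diff, axis=2)

    mask = distance <= tolerance
    count = int(mask.sum())
    if count == 0:
        return None, 0

    rows, cols = np.nonzero(mask)
    return (float(rows.mean()), float(cols.mean())), count


def pixel_to_heading(centroid, image_shape, focal_length_px):
    """Convert a pixel centroid to a unit line-of-sight vector.

    Assumes an ideal pinhole camera with the principal point at the image
    center and a camera frame with x to the right, y down and z along the
    boresight (an assumed convention).
    """
    row, col = centroid
    height, width = image_shape[:2]
    center_col = (width - 1) / 2.0
    center_row = (height - 1) / 2.0
    vector = np.array([col - center_col, row - center_row, focal_length_px])
    return vector / np.linalg.norm(vector)


# Synthetic frame: black background with a magenta square target
frame = np.zeros((120, 160, 3), dtype=np.uint8)
frame[40:60, 100:120] = (255, 0, 255)

centroid, pixel_count = track_colored_feature(frame, target_rgb=(255, 0, 255))
heading = pixel_to_heading(centroid, frame.shape, focal_length_px=200.0)
```

Running the tracker on every frame of an image sequence yields a time history of headings that a relative motion estimator or controller could consume. A plain color threshold is far less robust to lighting changes and partial occlusion than a contour-based tracker, which is one reason to prefer methods such as visual snakes in practice.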

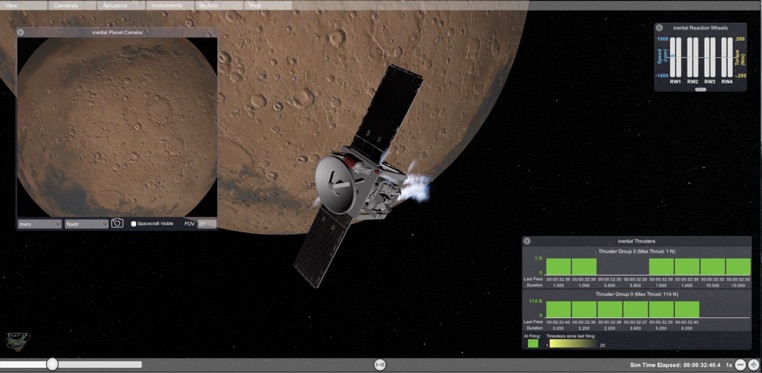

Current work is developing an open software architecture for visual spacecraft control. Here the Basilisk Astrodynamics Simulation Framework, or BSK for short, is used to model the spacecraft motion, and a three-dimensional BSK visualization is used to generate a synthetic camera image sequence. This allows visual closed-loop control scenarios to be simulated purely in software. The camera images can be corrupted to match real images using tools such as OpenCV. A key aspect of this simulation environment is that images of arbitrary fidelity, size and color space can be simulated without losing synchronization with the separate BSK simulation.
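As a rough illustration of what corrupting a synthetic camera image can involve, the sketch below applies a box blur, additive Gaussian noise and a few dead pixels to a clean frame. It uses plain NumPy as a stand-in rather than OpenCV, and it is not the Basilisk implementation: the function name `corrupt_image`, its parameters and the particular set of corruptions are assumptions chosen for illustration.

```python
import numpy as np


def corrupt_image(image, noise_sigma=8.0, blur_radius=1,
                  dead_pixel_fraction=0.001, seed=0):
    """Degrade a clean H x W x 3 uint8 image (hypothetical helper).

    Applies, in order: a (2r+1) x (2r+1) box blur, zero-mean Gaussian
    noise with standard deviation noise_sigma, and randomly placed dead
    (black) pixels. A fixed seed makes the corruption repeatable.
    """
    rng = np.random.default_rng(seed)
    corrupted = image.astype(np.float64)
    height, width = image.shape[:2]

    # Box blur: average shifted copies of an edge-padded image
    if blur_radius > 0:
        r = blur_radius
        padded = np.pad(corrupted, ((r, r), (r, r), (0, 0)), mode="edge")
        accumulated = np.zeros_like(corrupted)
        for d_row in range(2 * r + 1):
            for d_col in range(2 * r + 1):
                accumulated += padded[d_row:d_row + height,
                                      d_col:d_col + width]
        corrupted = accumulated / (2 * r + 1) ** 2

    # Additive sensor noise
    if noise_sigma > 0:
        corrupted += rng.normal(0.0, noise_sigma, size=corrupted.shape)

    # Dead pixels read zero in every color channel
    if dead_pixel_fraction > 0:
        dead = rng.random((height, width)) < dead_pixel_fraction
        corrupted[dead] = 0.0

    # Round and saturate back to the 8-bit range
    return np.clip(np.rint(corrupted), 0, 255).astype(np.uint8)


# Synthetic "clean" frame: uniform gray with a bright square feature
clean = np.full((64, 64, 3), 128, dtype=np.uint8)
clean[24:40, 24:40] = 255
noisy = corrupt_image(clean, seed=1)
```

Seeding the random generator keeps the corrupted sequence reproducible from run to run, which makes it easier to compare controller behavior across simulations. With OpenCV available, the blur step could be replaced by a filter such as `cv2.GaussianBlur`.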

This visual spacecraft sensor modeling capability is being used to research autonomous deep space navigation using celestial objects and a sun heading sensor. The framework can also be used for visual relative motion sensing with respect to spacecraft, the Moon or asteroids.